Over the last few weeks I’ve been reviewing options for a new build controller that supports continuous integration in a Visual Studio 2015 development environment. Now it’s time to wrap up and compare the two options I tested.

The two tools that I was able to successfully implement were Visual Studio Online and TeamCity 9.1.

Both solutions had their strong and weak points, and which one you choose will depend heavily on your precise needs and development environment.

Why Continuous Integration?

First, let’s examine why you would need a build server and CI in the first place.

Failing early

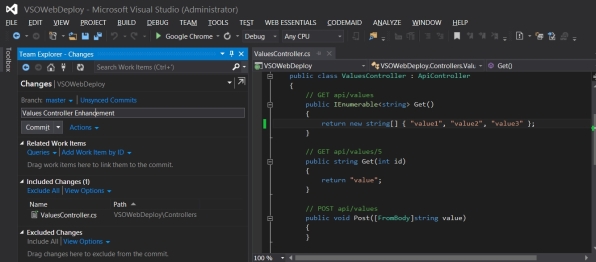

This is a really important factor when doing agile development. If you have a complex application, it’s inevitable that things will break from time to time. The earlier you know about it, the less effort it will take to fix.

Integration Testing

If your app is hosted in the cloud, you will want to test it in the real world. Doing this manually is error-prone and time-consuming.

Automated deployment

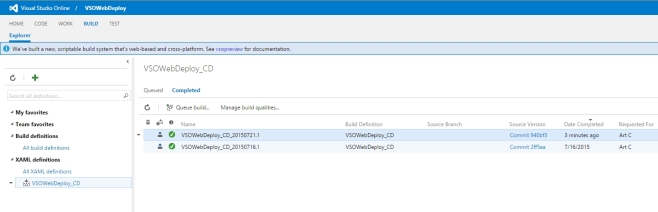

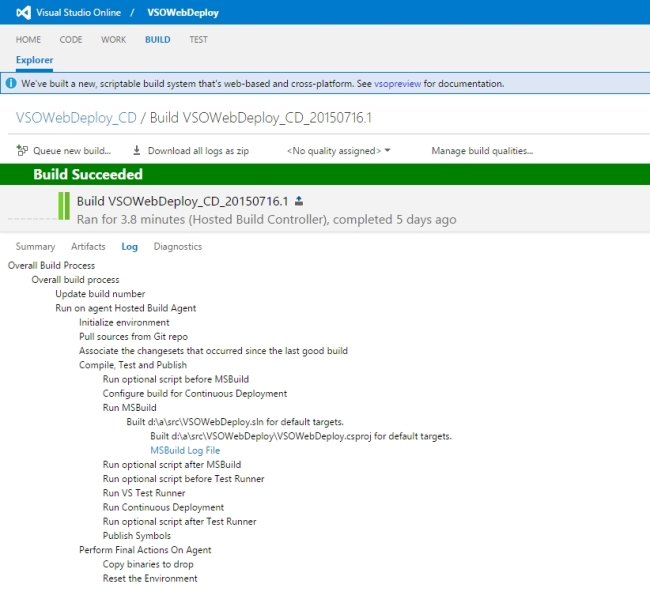

As with integration testing, deploying complex applications by hand is error-prone and time-consuming. CI will automate this process.

Code Coverage

You can run code coverage tools as part of your build to make sure that any new code has an associated unit test.

Standard Workflow

CI tools can automate more than just the build – once the build is done and tested, the staging server can be backed up, and the new build deployed automatically.
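As a rough illustration of that workflow, here is a minimal Python sketch of a fail-fast pipeline: each step runs in order, and the first failure stops everything after it. The step functions (`build`, `run_tests`, `backup_staging`, `deploy_to_staging`) and the `run_pipeline` helper are hypothetical placeholders of my own, not part of VSO or TeamCity; in a real build server each would be a configured build step.

```python
from typing import Callable, List, Tuple

# A step is a display name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            print(f"Step failed: {name}. Skipping the remaining steps.")
            break
        completed.append(name)
    return completed


# Hypothetical placeholder steps. Real ones would invoke the compiler,
# the test runner, a backup job and a deployment tool.
def build() -> bool:
    return True


def run_tests() -> bool:
    return True


def backup_staging() -> bool:
    return True


def deploy_to_staging() -> bool:
    return True


PIPELINE: List[Step] = [
    ("build", build),
    ("test", run_tests),
    ("backup staging", backup_staging),
    ("deploy to staging", deploy_to_staging),
]

if __name__ == "__main__":
    print(run_pipeline(PIPELINE))
```

The point of the ordering is that a broken build or a failing test never reaches the backup or deploy steps, so the staging server is only touched by builds that have already passed.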

Environment

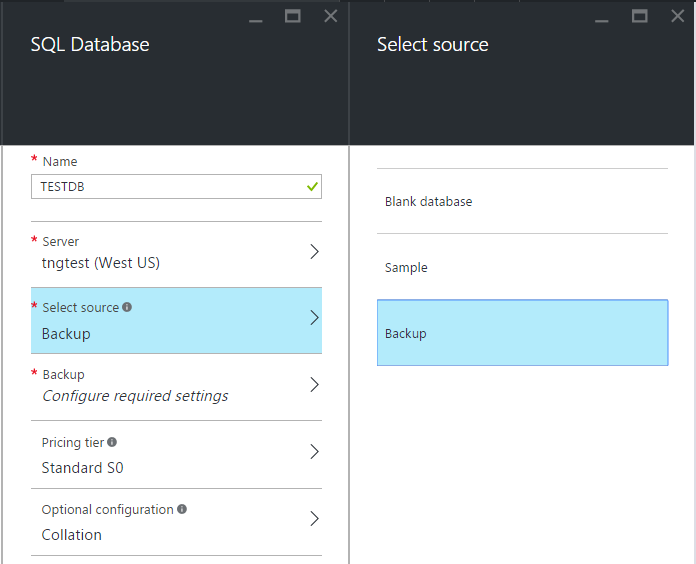

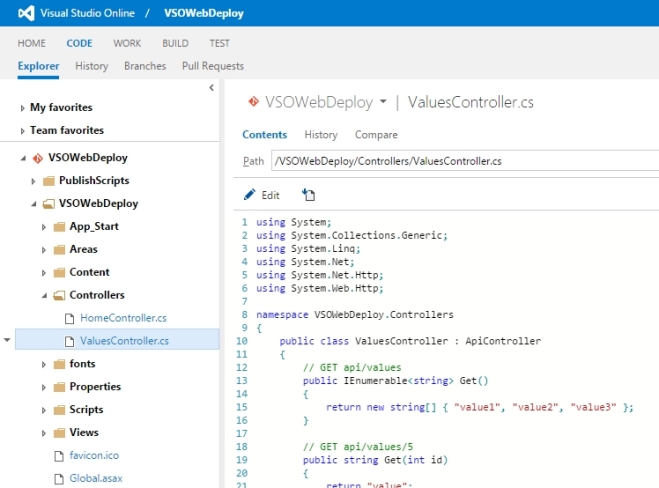

For the purpose of this comparison, the solution being developed was a web application containing multiple projects, each of which is hosted in its own Azure Web App or Web Job. The solution is implemented in Visual Studio 2015, though the front end is a Single Page Application developed in pure HTML/JavaScript.

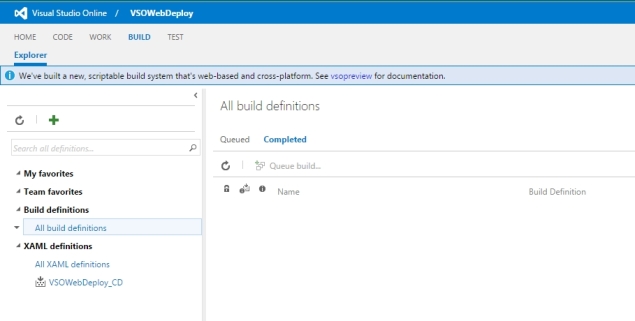

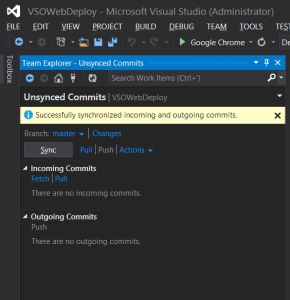

Setting up the build server

In terms of ease of use, both TeamCity and VSO were very easy to set up and configure, and worked out of the box with Visual Studio 2015. VSO has the edge here, though, as TeamCity requires a dedicated server, whereas VSO is a cloud solution. Another plus for VSO is that it has source control covered, so if you are really starting from scratch, VSO can be a complete solution that works out of the box. With TeamCity, you will need to set up your source control separately, though it supports a wide range of source control systems, and even more with plugins. If you have an existing source control server hosted internally, there’s every chance you can set up TeamCity to use it, whereas with VSO, you will probably end up using VSO’s source control for some part of the process. Git and TFVC are supported in VSO.

Security

VSO uses Microsoft Live accounts and/or Azure subscriptions to manage access to the entire Azure platform, including VSO, Azure hosting and source control. This integrated security can further be managed by syncing with Active Directory. This is a fantastic solution if you are already in an environment where each developer has a Windows account and an MSDN subscription.

In contrast, TeamCity has individual user accounts, roles and groups that are specific to TeamCity. This means that managing users in TeamCity becomes an additional administrative task if (when) your team changes.

VSO also has better tools and support for larger development teams, given it’s integrated with the entire Microsoft ecosystem. The downside to VSO is that your source code will be stored on Microsoft’s servers, which can be a security issue in itself.

TeamCity is therefore the better solution if you don’t feel comfortable putting your source code in the cloud.

Features

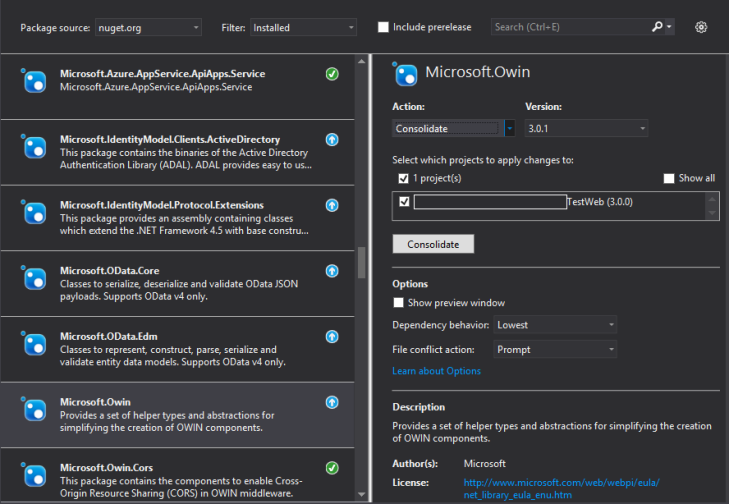

Both build servers support a huge set of common features related to managing builds, testing and deployment. If your builds are fairly standard, either solution will handle them well. You can set up build steps to call command line tools, manage NuGet packages, run unit tests, deploy to a web server and much, much more.

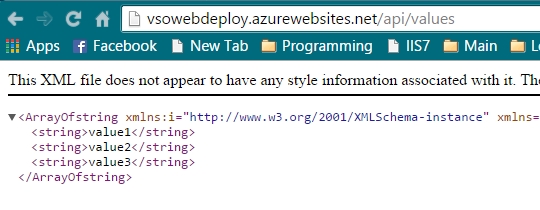

VSO includes support for a lot more environments, such as Android and iOS, and, of course, extremely easy integration with Azure Web Apps.

TeamCity can also support the same features, but a lot more work would be required to set up the necessary build agents.

If your needs are those of a typical web application developer, there are enough tools in each solution to handle all your needs. More complex applications are supported by both tools, but will require additional work.

One distinct advantage of VSO is that it comes fully integrated with a suite of tools to support very comprehensive development lifecycle management. This includes source control, defect tracking, QA processes, and project management. TeamCity, in contrast, does one thing very well, and has the advantage if you already have existing processes in place, and just want to add CI.

Usability

There’s not much between the two solutions here. Each one comes with its own quirks and perks. Neither product was difficult to use, but I did find that sometimes I spent far too much time looking for a particular option in VSO, mostly because VSO is a much more complex and comprehensive product. In contrast, setting up a project, monitoring builds and viewing statistics and results was dead simple in TeamCity.

It must be said that VSO looks a lot more modern, and most of its features have a wow-factor compared to similar tools from other vendors. The Microsoft engineers and designers have done an excellent job of putting together such a complex yet usable piece of software.

Performance

TeamCity has to get the trophy here, as VSO can be a bit sluggish – both in terms of User Interface, and also the process of initiating a build. Most of this is due to the fact that TeamCity is hosted on the local network, and runs on a pretty decent dedicated server, but the reality is that you are unlikely to match the performance of a locally hosted tool with something that is hosted in the cloud.

Price

Often this is what the choice comes down to. Here, again, VSO can be a little confusing, as the price depends on how invested you already are in Microsoft’s products. If you are an MSDN subscriber, you already have a reasonable amount of monthly credit to use on Azure services, which covers the cost of running builds in VSO. There is no upfront cost; instead you are paying for a subscription to a service, so costs will depend on how much you use it.

Overall, considering you don’t need to buy and maintain server infrastructure, the price is quite reasonable, and Microsoft are trying hard to make their own tools as attractive as possible.

Pricing for TeamCity is a lot more like a traditional piece of software. There are 2 versions available: Professional and Enterprise, and the good news is that Professional is completely free.

This version is sufficient for the needs of a small team of developers.

Additional projects and build agents can be added for around $300 each, and the Enterprise version at $1999 removes all restrictions on the number of projects and build agents. However, you will need to include the cost of hosting TeamCity and source control on your own servers, including patching, electricity, maintenance etc. There’s also an overhead for making sure that all the pieces work together, which is not the case in VSO.
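To make the licence arithmetic concrete, here is a small Python sketch that compares buying add-ons one at a time against buying Enterprise outright. It uses only the approximate figures quoted above (around $300 per add-on, $1999 for Enterprise), treats every add-on as costing the same, and ignores hosting and maintenance. The function names are my own, and the prices are assumptions that may well have changed.

```python
import math

# Approximate prices quoted in this article; treat them as assumptions.
ADDON_PRICE = 300        # one additional project or build agent
ENTERPRISE_PRICE = 1999  # removes the limits on projects and agents


def addon_cost(addons: int) -> int:
    """Total cost of buying the given number of add-ons individually."""
    return addons * ADDON_PRICE


def break_even_addons() -> int:
    """Smallest number of add-ons that costs at least as much as Enterprise."""
    return math.ceil(ENTERPRISE_PRICE / ADDON_PRICE)


def cheaper_option(addons: int) -> str:
    """Name the cheaper licence route for the given number of add-ons."""
    return "add-ons" if addon_cost(addons) < ENTERPRISE_PRICE else "Enterprise"


if __name__ == "__main__":
    for n in (1, 6, 7):
        print(n, addon_cost(n), cheaper_option(n))
```

On these figures, six add-ons come to $1800 and seven to $2100, so Enterprise becomes the cheaper route from the seventh add-on onwards.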

Conclusion

It’s difficult to recommend one solution over the other, as both are excellent. If you have an existing infrastructure, including servers, source control and a defect tracking system, and you want to continue using these, then TeamCity is probably going to integrate better with what you already have. On the other hand, if you have none of these things and want to get up and running quickly, then VSO is going to start looking pretty good.

The only other thing of note is that VSO, being cloud based, will require a decent internet connection. Mostly, this is no longer an issue these days, but if you prefer the assurance that all the parts of your build and deployment process are hosted in-house, then you won’t be disappointed with the features in TeamCity.